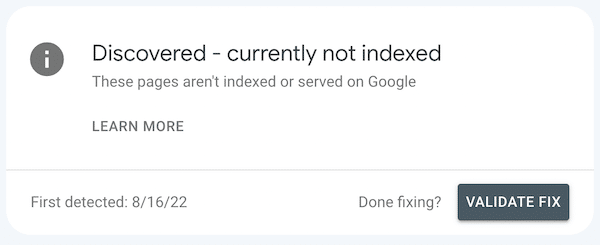

I once checked the sitemaps I had submitted for my website in Google Search Console and found pages with the status “Discovered – currently not indexed.” It means Google knows about these URLs but has not crawled or indexed them yet. The screenshot above shows how this status looks in Search Console.

Before I explain how to fix the “Discovered – currently not indexed” issue, it helps to understand what causes it. Google may leave these pages unindexed for several reasons, including:

- The page has low-quality or duplicate content.

- A Disallow rule in the robots.txt file blocks Google from crawling some or all of your pages.

- Poor internal link structure.

- Crawl budget issues.

- Unnecessary redirects.

First, copy the URL of one of the affected pages, paste it into the URL Inspection tool in Google Search Console, and click Test Live URL to check whether Google can index the page. The test will tell you if the page is blocked from indexing by a noindex tag. If everything looks fine, move on to the other possible causes.
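You can also scan a page's HTML for noindex directives yourself. The Python sketch below is an illustrative helper, not a Google tool, and its function names are hypothetical. It looks for `noindex` or `none` in `<meta name="robots">` and `<meta name="googlebot">` tags and in an `X-Robots-Tag` response header. It simplifies the header syntax, so treat the URL Inspection result as the authoritative answer.

```python
from __future__ import annotations

from html.parser import HTMLParser
from typing import Mapping, Optional


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() in ("robots", "googlebot"):
            self.directives.append(attr_map.get("content", ""))


def _has_noindex_token(value: str) -> bool:
    # Directives are comma-separated. Any "crawler:" prefix is dropped, so a header
    # aimed at another crawler is also counted (a deliberate simplification).
    for token in value.lower().split(","):
        if token.split(":")[-1].strip() in ("noindex", "none"):
            return True
    return False


def has_noindex(html: str, headers: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if the page's robots meta tags or X-Robots-Tag header say noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    if any(_has_noindex_token(content) for content in parser.directives):
        return True
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag" and _has_noindex_token(value):
            return True
    return False


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(has_noindex(page))  # True
```

To check a live page, you could fetch it with `urllib.request.urlopen` and pass the decoded body plus `dict(response.headers)` to `has_noindex`.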

If there’s a “noindex” meta tag, remove it. If robots.txt disallows crawling of the page, fix the rule. If both are fine, check the quality of the content. Content quality plays an important role: if Google doesn’t find the content valuable, it may decide not to index the page. Make sure your content is up to date and meets Google’s quality guidelines.
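To test the robots.txt step offline, Python's standard `urllib.robotparser` module can evaluate a set of rules against a URL. This is a minimal sketch with a hypothetical helper name. Note that `urllib.robotparser` follows the original robots.txt conventions (the first matching rule wins, and `*` or `$` wildcards inside paths are not supported), whereas Googlebot applies the most specific matching rule and understands wildcards, so results can differ for complex files.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    # parse() takes the file's lines; set_url() followed by read() fetches a live file instead.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /private/\n"
    print(is_crawl_allowed(rules, "https://example.com/private/page"))  # False
    print(is_crawl_allowed(rules, "https://example.com/blog/post"))  # True
```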

Google treats outdated content, auto-generated content, spammy user-generated content, and duplicate content as low-quality content. Review each affected page, and if its content is low quality, improve it and then resubmit the page to Google, for example with Request Indexing in the URL Inspection tool.
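Google does not publish how it detects duplicate content, but a common rough check for near-duplicate pages is to compare their word shingles (overlapping runs of words) using Jaccard similarity. The sketch below assumes you already have each page's main text as a string; the function names and the 0.8 threshold are illustrative assumptions, not Google values.

```python
from __future__ import annotations

import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Lowercase the text, split it into words, and return the set of overlapping word n-grams."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles (1.0 means identical sets)."""
    if not a or not b:
        return 0.0  # a page too short to form any shingle is not compared
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return (url_a, url_b, score) for every pair of pages at or above the threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for (url_a, set_a), (url_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            pairs.append((url_a, url_b, score))
    return pairs


if __name__ == "__main__":
    pages = {
        "/a": "How to fix the discovered currently not indexed status in Search Console",
        "/b": "How to fix the discovered currently not indexed status in Google Search Console",
        "/c": "A recipe for banana bread with walnuts and cinnamon",
    }
    for url_a, url_b, score in near_duplicates(pages, threshold=0.5):
        print(url_a, url_b, f"{score:.2f}")  # /a /b 0.62
```

Pairs it flags are candidates for rewriting or consolidating; the threshold is worth tuning on your own pages.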

You also need to build high-quality links to the page: internal links from other pages of your website and backlinks from other reputable websites. These links increase the authority of the page and help search engines discover it faster.
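For the internal-link side, one way to spot weakly linked pages is to count how many distinct pages link to each URL. This sketch assumes a hypothetical `links` mapping from each crawled URL to the internal URLs it links to, for example exported from a site crawler. It does not cover backlinks from other websites, which you would check with the Links report in Search Console or a third-party tool.

```python
from __future__ import annotations

from collections import Counter


def inbound_link_counts(links: dict[str, list[str]]) -> Counter:
    """Count how many distinct other pages link to each URL (self-links are ignored)."""
    counts = Counter({page: 0 for page in links})  # start every crawled page at zero
    for source, targets in links.items():
        for target in set(targets):  # a page linking twice to the same URL counts once
            if target != source:
                counts[target] += 1
    return counts


def weakly_linked_pages(links: dict[str, list[str]], min_links: int = 1) -> list[str]:
    """Return URLs with fewer than min_links internal links pointing at them."""
    counts = inbound_link_counts(links)
    return sorted(page for page, n in counts.items() if n < min_links)


if __name__ == "__main__":
    links = {
        "/": ["/blog", "/about"],
        "/blog": ["/", "/blog/post-1"],
        "/about": ["/"],
        "/blog/post-1": ["/blog"],
        "/blog/post-2": ["/"],  # nothing links here, so it is an orphan page
    }
    print(weakly_linked_pages(links))  # ['/blog/post-2']
```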

Also make sure these pages are listed in your sitemap. A sitemap guides search bots to the important pages of your website so they can be crawled and indexed. If your sitemap is poorly maintained, for example if it lists redirected, broken, or duplicate URLs, it can waste your crawl budget, and Google may miss your important content.
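If you generate your sitemap yourself, the sketch below builds a minimal XML sitemap in the sitemaps.org format using Python's standard `xml.etree.ElementTree`. The helper name and the input list are hypothetical; include only the canonical, indexable URLs you want Google to crawl. ElementTree escapes characters such as `&` in URLs, as the protocol requires.

```python
from __future__ import annotations

import datetime as dt
import xml.etree.ElementTree as ET
from typing import Iterable, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: Iterable[Tuple[str, Optional[dt.date]]]) -> bytes:
    """Return a sitemap.xml document (UTF-8 bytes) listing each URL and an optional last-modified date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod is not None:
            # <lastmod> uses the W3C Datetime format; a plain YYYY-MM-DD date is valid.
            ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    xml_bytes = build_sitemap([
        ("https://example.com/", dt.date(2024, 1, 15)),
        ("https://example.com/blog/", None),
    ])
    print(xml_bytes.decode("utf-8"))
```

You could save the result as `sitemap.xml` and submit it in the Sitemaps report in Search Console.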

You also need to make sure your website loads quickly. If your server is slow to respond, Google may postpone crawling and indexing the page. It will try again later, but indexing may be delayed further if it finds the site slow again. I recommend checking the Crawl Stats report in Google Search Console and looking at the Average response time. If the response time is high, you need to improve it. To open the Crawl Stats report, click Settings in the Search Console sidebar and then click Crawl stats.
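To spot-check response times from your own machine, you can time a few requests. This is a rough sketch with hypothetical helper names: it measures the full download from wherever the script runs, so the numbers will not match the Average response time in Crawl Stats, which reflects Googlebot's own requests. The fetch function is a parameter so it can be swapped out, for example in tests.

```python
import time
import urllib.request
from typing import Callable, Iterable


def fetch(url: str) -> None:
    """Download url and discard the body (a simple stand-in for a crawler request)."""
    with urllib.request.urlopen(url, timeout=30) as response:
        response.read()


def average_response_ms(urls: Iterable[str], fetch_fn: Callable[[str], None] = fetch) -> float:
    """Time fetch_fn for each URL and return the mean duration in milliseconds."""
    timings = []
    for url in urls:
        start = time.perf_counter()
        fetch_fn(url)
        timings.append((time.perf_counter() - start) * 1000)
    if not timings:
        raise ValueError("no URLs given")
    return sum(timings) / len(timings)
```

For example, `average_response_ms(["https://example.com/", "https://example.com/blog/"])` returns the mean time in milliseconds for those two pages; running it a few times at different hours gives a fairer picture than a single run.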

Finally, look at the structure of your website. Divide its content into categories or sections, each with unique text, and link relevant sub-pages properly. This lets Google navigate your website easily and find all of your pages. Content also plays an important role, so make sure each page offers useful, relevant content.
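One way to audit site structure is to compute each page's click depth, the minimum number of links needed to reach it from the home page, with a breadth-first search over your internal link graph. The sketch assumes a hypothetical `links` mapping from each crawled URL to the internal URLs it links to; the `max_depth=3` cutoff is a common rule of thumb, not a Google requirement.

```python
from __future__ import annotations

from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the home page; maps each reachable URL to its click depth."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def structure_report(
    links: dict[str, list[str]], home: str, max_depth: int = 3
) -> tuple[list[str], list[str]]:
    """Return (pages not reachable from home, pages deeper than max_depth clicks)."""
    depths = click_depths(links, home)
    unreachable = sorted(set(links) - set(depths))
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    return unreachable, too_deep


if __name__ == "__main__":
    links = {
        "/": ["/blog", "/about"],
        "/about": [],
        "/blog": ["/blog/page-2"],
        "/blog/page-2": ["/blog/page-3"],
        "/blog/page-3": ["/blog/old-post"],
        "/blog/old-post": [],
        "/landing-page": ["/"],  # links out, but nothing links to it
    }
    print(structure_report(links, "/"))  # (['/landing-page'], ['/blog/old-post'])
```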
